Superagent recently launched!

Founded by Alan Zabihi & Ismail Pelaseyed

AI agents introduce new attack surfaces that traditional security practices don’t cover:

Without protection, agents can leak customer data or trigger destructive actions that impact your product and your users.

At the core is SuperagentLM, their small language model trained specifically for agentic security. Unlike static rules or regex filters, it reasons about inputs and outputs to catch subtle and novel attacks.

Superagent integrates at three key points:

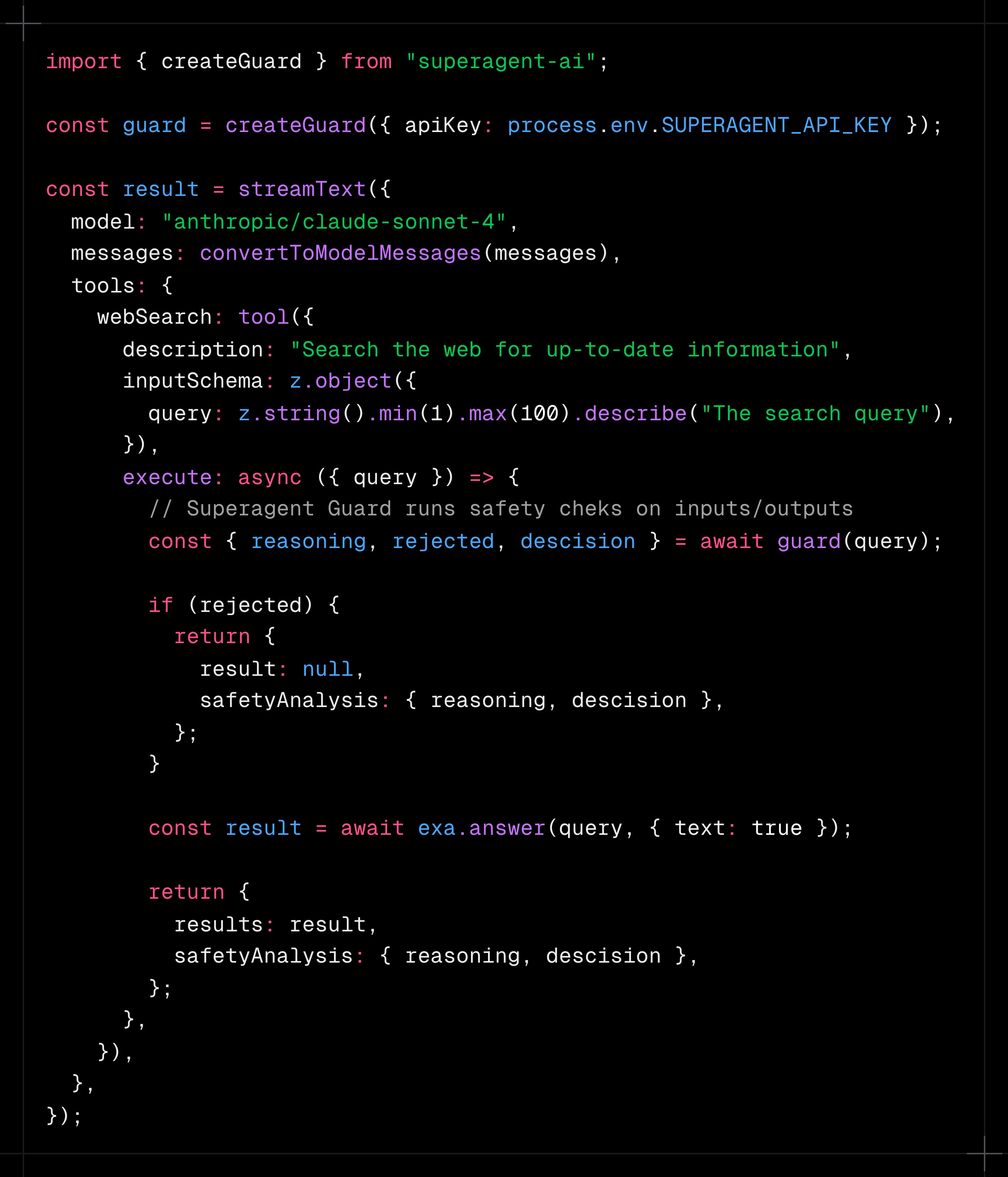

Here’s a quick example of how to use it with Exa (YC S21):

Every request is inspected in real time. Unsafe ones are blocked. Safe ones go through — with reasoning logs you can audit later.

They have been working closely with builders of AI agents for the last couple of years, building tools for them. What they noticed is that many teams are basically trying to system-prompt their way to security. Vibe security (VibeSec) obviously doesn’t work.

Some of the most popular agentic apps today are surprisingly unsafe in this way. So they decided to see if they could fix it. That’s the motivation behind Superagent: giving teams a real way to ship fast and ship safe.

They would love your feedback: what’s your biggest concern about running agents in production? Book a call or drop a comment!